By J.P. Massar

Oakland Privacy members provided the impetus for the showing of ‘National Bird’ at the OMNI Commons on November 19th. It is the story of three service members enmeshed in the US government’s drone war: its effect on their lives both during and after their enlistment, and the cruel toll it takes on the people who are its targets, their families and their neighbors. It’s a good production, and you should see it. (It’s available on Netflix, Amazon, iTunes and Vudu: http://nationalbirdfilm.com/).

The reality it depicts is frightening. But it’s not nearly as frightening as what seems likely to come.

Some say that armed drones are just another part of a vast and deadly arsenal. Others say the whole idea of raining anonymous death from the sky on the unsuspecting is repugnant, terror disguised as a tactic, and should be treated like biological and chemical warfare: i.e., “outlawed” (as if war ever really knew real boundaries).

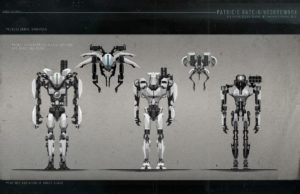

But perhaps the most frightening aspect of drone warfare is that it is the vanguard of something that science fiction writers have been warning us about for most of the last century – autonomous devices that can wage war on their own, deciding what, and who, is and is not a target or a threat.

While the film describes drones controlled by human beings, albeit half a world away, it alludes to the military’s desire to make them autonomous (cf. “Autonomous Military Drones No Longer Science Fiction”). If a car can become self-driving (and it seems almost certain that it can), then many types of mobile robots armed with weapons can similarly be trained to make decisions on their own on a battlefield, be it on land, in the air, at sea or in space. In fact, it seems unlikely that the military is NOT working feverishly on such devices as you read this, with little regard for the ethical and philosophical concerns they raise.

It’s bad enough to think of human-controlled swarms of bee-like mini-robots attacking individuals, or fleets of semis – controlled by people with the same mentality as our seemingly endless supply of mass shooters – rampaging down the Interstates. But to have them set loose on their own – with unknown programming and who-can-really-say what high-level goals assigned to them (whether by US authorities in a foreign land to “protect our interests”, by a foreign power in the US, or by our own police on crowds of protesters) – is enough to make one long for the days when the only computers were human (en.wikipedia.org/wiki/Human_computer).

Unfortunately, nostalgia doesn’t undo technological progress; science fiction tends to become science fact; and ethics plays little, if any, role in what is actualized from theory. While influential people like Stephen Hawking, Elon Musk and Steve Wozniak have already urged a ban on warfare using autonomous weapons or artificial intelligence, it seems unlikely their call will be heeded, any more than calls to destroy nuclear weapons have been taken seriously by people in power.

So how do we get out of yet another fine mess we’ve gotten ourselves into? I’m out of ideas, but the answer probably isn’t going to be delivered from the sky.